🔆 Fake Me Not Pt. 2: Explicit Deepfakes

South Korea's response to this widespread issue, a new global agreement on our digital future, and more.

🗞️ Issue 37 // ⏱️ Read Time: 7 min

Hello 👋

We’ve talked about deepfakes before, but we’ve only briefly touched upon one of their most widespread and problematic uses: pornography.

In this week's newsletter

What we’re talking about: The rise of AI-generated deepfake pornography and its implications for privacy, consent, and online safety.

How it’s relevant: Deepfake technology is becoming increasingly accessible, blurring the lines between reality and fabrication in digital content, and affecting people of all ages and backgrounds.

Why it matters: The unchecked spread of deepfake pornography threatens to erode trust in digital media, exacerbate gender-based violence, and fundamentally alter our concept of personal identity in the digital age. As this technology evolves, it challenges our legal systems, social norms, and individual rights in unprecedented ways.

Big tech news of the week…

⚖️ Together with Zambia, Sweden led negotiations on the Global Digital Compact, adopted last week as part of the Pact for the Future. It became the first comprehensive agreement within the UN that addresses digital issues, including AI.

⛽ Constellation Energy Corp. announced it has signed a 20-year deal to supply Microsoft Corporation with nuclear power to fuel the company’s AI operations by reopening Three Mile Island, the site of the worst accident at a commercial nuclear power plant in U.S. history.

🌏 Sony Research and AI Singapore (AISG) will collaborate on research for the SEA-LION family of large language models (LLMs). SEA-LION, which stands for Southeast Asian Languages In One Network, aims to improve the accuracy and capability of AI models when processing languages from the region.

Check your messages

Imagine you’re scrolling through your phone on your morning commute when a message pops up from a friend:

“Hey, have you seen what’s going around on social media? There’s a video…it looks like you.”

Your heart sinks as your mind races with worst-case scenarios. You know what this might be - a deepfake.

We hope this has never happened to you, but it’s becoming an increasingly common reality for many. Deepfakes don't just affect celebrities or public figures; they can target anyone, from your classmate to your colleague.

The emotional toll of discovering a deepfake of yourself can be immense, especially when the perpetrators are peers. It's a violation of privacy that leaves victims feeling exposed, vulnerable, and often powerless. And even after the content is proven fake, the initial impact can linger, affecting relationships, careers, and mental wellbeing.

Let’s take a deeper look at this serious issue and what’s being done to combat it.

Understanding Deepfakes

Deepfakes are a form of synthetic media that use artificial intelligence to digitally alter a person’s likeness. This technology can create incredibly realistic depictions of people saying or doing things they never actually did.

To create a deepfake, AI algorithms are trained on datasets consisting of images or videos of the target person. These algorithms analyze the data, learning the person’s appearance and mannerisms. Once trained, the AI can generate new content that mimics the target, making it difficult to distinguish from real footage. Today, some apps can create convincing deepfakes with just a single photo and a few seconds of audio - something that previously required significant technical expertise.

While this technology is impressive and can be used in non-threatening ways (such as in movie special effects), it also has the potential to be misused. For example, deepfakes can be exploited to create explicit content without the consent or knowledge of the depicted person. In fact, the most prevalent use of deepfakes is in pornography, where a person's face is superimposed onto the body of an adult performer.

The Global Deepfake Crisis

Deepfake technology can facilitate various criminal activities, such as non-consensual pornography and online child sexual exploitation. According to the 2023 State of Deepfakes report, the vast majority of deepfake videos are pornography featuring women rather than men, and these videos are often weaponised to intimidate and silence women. Gender-based violence causes significant harm to victims and is considered a security issue. The prevalence of deepfakes also erodes trust in online content and can have far-reaching societal implications.

Despite a general lack of awareness of the issue, some countries have noticed the consequences and started to take action (better late than never, right?). Here’s Team Lumiera’s quick overview of how some nations are dealing with this:

South Korea's Emergency Response 🇰🇷

President Yoon Suk Yeol has called for a thorough investigation and eradication of digital sex crimes, as the country has been hit by a wave of sexually explicit deepfakes, indiscriminately targeting women and girls using their school photos, social media selfies and even military headshots.

The country's media regulator is holding emergency meetings to address the crisis.

Preliminary data indicates that the vast majority of the suspected perpetrators from the recent wave of cases were in their teens. There's a push for better education of young men to build a "healthy media culture."

United States Legislative Efforts 🇺🇸

The "Take It Down Act" proposed by Sen. Ted Cruz aims to hold social media companies accountable for policing and removing deepfake porn.

The “DEFIANCE Act”, which the Senate unanimously passed in July of this year, allows victims to sue creators and distributors of non-consensual deepfakes.

Debate continues over balancing swift action with concerns about technological innovation and free speech.

Global Trends 🌏🌍🌎

Many countries are dealing with outdated laws that don't address the unique challenges of deepfake technology.

The global nature of the internet requires coordinated efforts across borders: There's a growing recognition of the need for international cooperation to combat this borderless issue.

Tech companies are under increasing pressure to develop and implement better detection and removal systems.

How much of a problem is this, really?

We keep reading that the problem is getting more widespread, which is why states are starting to take action. So, what is the actual scale of the problem? Well, it’s quite big. Let’s have a look at the numbers.

In 2023, producers of deepfake porn increased their output by 464% year-over-year.

Deepfake pornography accounts for 98% of deepfake videos online. Yes, read that again. Only 2% of videos are other types of deepfakes - the vast majority is deepfake porn.

99% of all deepfake porn features women, while only 1% features men.

This means that approximately 97% of all deepfake videos online are sexually explicit deepfakes featuring women, many of them non-consensual. Victims range from celebrities like Taylor Swift and politicians like Rep. Alexandria Ocasio-Cortez to high school students.
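
For anyone who wants to check the math, here is a minimal sketch of how the figures above combine. The variable names are ours and purely illustrative; the percentages are the ones quoted above, and the calculation assumes the 99% figure applies to the same set of videos as the 98% figure.

```python
# Figures quoted above, written as fractions.
porn_share_of_deepfakes = 0.98   # share of deepfake videos that are pornographic
women_share_of_porn = 0.99       # share of deepfake porn that features women
yoy_increase = 4.64              # a 464% year-over-year increase in output

# Share of ALL deepfake videos that are explicit and feature women.
explicit_women_share = porn_share_of_deepfakes * women_share_of_porn

# A 464% increase means output grew to (1 + 4.64) times the previous year's level.
growth_factor = 1 + yoy_increase

print(f"Explicit deepfakes featuring women: {explicit_women_share:.1%}")  # 97.0%
print(f"Output vs. previous year: {growth_factor:.2f}x")                  # 5.64x
```

In other words, the 97% figure is simply 98% multiplied by 99%, and a 464% increase means output was more than five and a half times the previous year's.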

Combatting Deepfake Porn

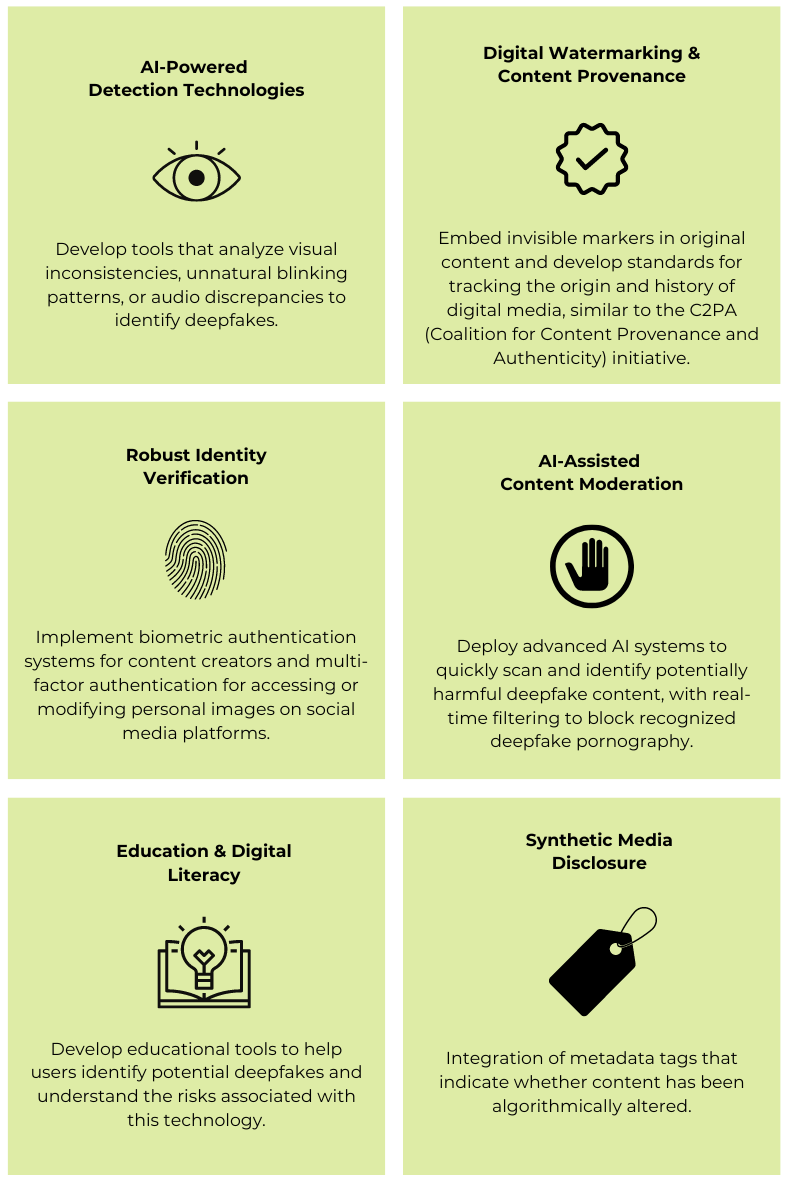

Techniques for creating more convincing deepfakes are constantly improving. Now that we’ve had a closer look at the problem and understand the global impact, let’s go through some of the potential solutions and guardrails:

As deepfake technology advances, we must grapple with new questions about digital consent, identity protection, and the authenticity of online content. Lumiera is committed to helping navigate these complex challenges and their broader impacts. Reach out to us at [email protected] to let us know what’s top of mind for you.

Until next time.

On behalf of Team Lumiera

Lumiera has gathered the brightest people from the technology and policy sectors to give you top-quality advice so you can navigate the new AI Era.

Follow the carefully curated Lumiera podcast playlist to stay informed and challenged on all things AI.