🔆 Measuring Responsible AI: TLDR of the World’s 1st Global R-AI Index

Responsible AI world rankings, huge energy demands of AI data centres and Anthropic's fast new model.

🗞️ Issue 24 // ⏱️ Read Time: 6 min

Hello 👋

Have you ever asked yourself how we can recognise or measure responsible AI? Defining and measuring responsible AI practices have proven to be critical challenges. The Global Index on Responsible AI (GIRAI) addresses these challenges head-on. This multidimensional tool assesses progress toward responsible AI in 138 countries, using a structured framework of metrics and methodologies. In this week's newsletter, we break down the key findings and recommendations of the first edition of the Global Index.

In this week's newsletter

What we're talking about: The first report by the Global Index on Responsible AI (GIRAI). The largest global data collection on responsible AI to date, this study provides a detailed assessment of responsible AI governance and practices across 138 countries.

How it’s relevant: This groundbreaking tool provides valuable insights into the global state of responsible AI, highlighting both progress and areas for improvement.

Why it matters: By addressing the identified gaps and building on existing strengths, we can work towards an AI ecosystem that is not only technologically advanced but also ethically sound and inclusive.

Big tech news of the week…

🖥️ Anthropic has a fast new AI model: Claude 3.5 Sonnet. The company says it can equal or beat OpenAI's GPT-4o and Google's Gemini at writing and translating code, handling multi-step workflows, and interpreting charts and graphs. It is also apparently better at understanding humour!

🌍 AI is already wreaking havoc on global power systems. AI data centres are huge energy black holes. Individual facilities can consume as much energy as 30,000 homes, and their rapid growth is straining grids worldwide. The numbers are astonishing: Sweden could see power demand from data centres roughly double over the course of this decade.

⚽ AI and Euro 2024: VAR is shaking up football. Updates to the technology can help referees make the toughest calls. As computing power increases, massive data sets can be processed and rendered very quickly. One major application is detecting offside violations, known as semi-automated offside technology.

Measuring Responsible AI

Responsible AI, as defined by GIRAI, refers to the design, development, deployment, and governance of AI in a way that respects and protects all human rights and upholds the principles of AI ethics through every stage of the AI lifecycle and value chain. The first edition of the Global Index on Responsible AI was released on June 13th this year. It is full of insights, analysis, and recommendations for policymakers, industry leaders, and civil society to collaborate in shaping AI's future.

The index reveals a wide spectrum of AI readiness and responsibility. Countries such as the Netherlands, Germany, Ireland, and the UK are leading the rankings, demonstrating advanced responsible AI ecosystems. Conversely, countries like the Central African Republic, Eritrea, and South Sudan show significant opportunities for improvement in their AI governance and implementation practices.

Who is responsible for responsible AI?

The GIRAI recognises the importance of government leadership in establishing and implementing frameworks for responsible AI and protecting and promoting human rights in the context of AI. Beyond this, it also assesses the contribution of different non-state actors within responsible AI ecosystems.

The report reveals a complex global landscape where responsible AI practices lag behind the fast-paced development and adoption of AI models. However, it also highlights bright spots in all regions of the world that exemplify how responsible AI practices are being implemented. The key takeaway: responsible AI is possible in all contexts.

What are we measuring?

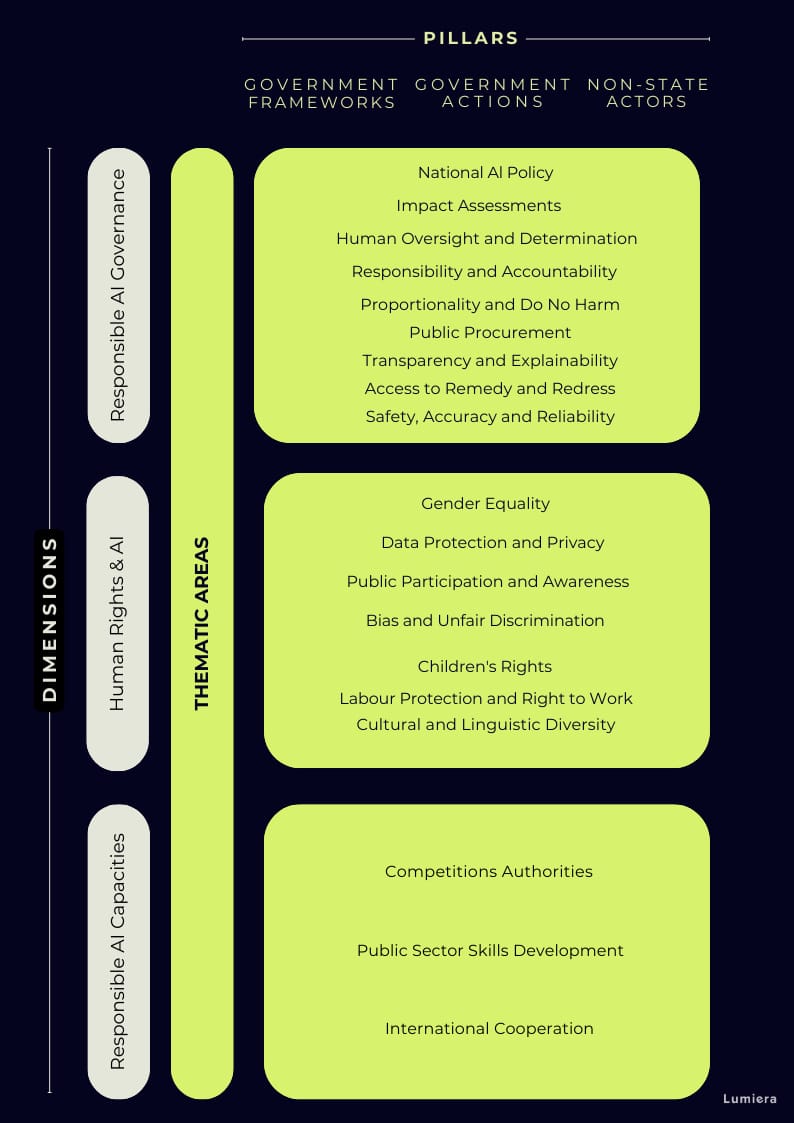

The Global Index on Responsible AI (GIRAI) uses a multifaceted measurement approach to assess each country's AI ecosystem. It covers 19 thematic areas across three dimensions: Human Rights and AI, Responsible AI Governance, and Responsible AI Capacities. The study, conducted between November 2021 and November 2023, involved in-country researchers answering 1,862 questions, with data undergoing rigorous quality assessment.

GIRAI evaluates government leadership and the roles of non-state actors. It gathers primary data on government frameworks, government actions, and the enabling environment for non-state actors. These data are then adjusted using secondary data from sources such as the World Bank and Freedom House, incorporating factors like rule of law, regulatory quality, and freedom of expression. The resulting scores, ranging from 0 to 100, offer a nuanced, comparative view of national efforts to promote responsible AI.

Key Findings

AI Governance Gaps: Many countries have national AI strategies, but there is often a disconnect between policy and practice, and having AI governance in place does not automatically translate into responsible AI. This can leave citizens vulnerable to AI-related risks, such as biased hiring decisions or discriminatory lending practices. Recommendations include adopting specific, legally enforceable frameworks that address key areas of AI and human rights.

Human Rights Concerns: Only a fraction of countries have established frameworks for assessing the impact of AI on human rights. Because redress mechanisms are not widespread, individuals harmed by AI systems may have limited recourse. To address this, countries should prioritise action to strengthen human rights standards and structures in the context of AI.

Gender Equality Gap: With only a quarter of countries addressing gender equality in AI, there's a risk of perpetuating existing societal biases. AI systems developed without considering gender equality could further disadvantage women in areas like career opportunities or loan approvals.

Justin Vaïsse, Founder and Director General of the Paris Peace Forum

What is important moving forward?

The GIRAI doesn't just highlight challenges; it also offers a roadmap for the future.

Collaboration is Key: The index underscores the importance of international cooperation in advancing responsible AI. Sharing best practices and establishing global standards will be crucial for addressing cross-border challenges.

Empowering All Stakeholders: The index provides tailored recommendations based on a country's score. For example, high-scoring countries can lead in international collaboration, while low-scoring countries can focus on empowering civil society to participate in AI discussions.

Focus on Inclusion: Efforts are needed to ensure AI benefits all of humanity. Specific policies are required to address the potential impact of AI on marginalised groups like Indigenous populations and people with disabilities.

Until next time.

On behalf of Team Lumiera

Lumiera has gathered the brightest people from the technology and policy sectors to give you top-quality advice so you can navigate the new AI Era.

Follow the carefully curated Lumiera podcast playlist to stay informed and challenged on all things AI.