🔆 When Machines Become Our Digital Bouncers: AI in Content Moderation

TikTok's latest workforce shift, US national security, and wildlife conservation.

🗞️ Issue 42 // ⏱️ Read Time: 5 min

Hello 👋

Dog and cat videos, vacation photos, heated political debates. This is some of the content you see on social media. What about all the content you don’t see? Behind every social feed is an invisible army of content moderators, both human and AI, working to keep the digital world from descending into chaos. It's time to pull back the curtain on how content moderation really works.

In this week's newsletter

What we’re talking about: The massive scale of content moderation happening across social media platforms, who's doing it (humans and AI), and how these decisions shape our online experiences.

How it’s relevant: With platforms making millions of moderation decisions in a single day, understanding who makes these calls and how they're made is crucial for anyone who uses social media - which is pretty much all of us.

Why it matters: Understanding the scale and mechanics of content moderation helps us grasp how platforms are balancing automation with human oversight, and what that means for workers, users, and society at large.

Big tech news of the week…

🇺🇲 US President Biden issued the nation’s first-ever National Security Memorandum (NSM) on AI, founded on the premise that cutting-edge AI developments will substantially impact national security and foreign policy in the immediate future.

🌍 Google announced a $5.8 million commitment to support AI skilling and education across Sub-Saharan Africa. The funding will equip workers and students with foundational AI and cybersecurity skills and help nonprofit leaders and public-sector workers build their AI capabilities.

🦁 In a significant development for wildlife conservation, AI-based trail cameras installed by WWF-Pakistan in the Gilgit-Baltistan region have successfully reduced conflicts between local communities and endangered snow leopards.

The AI Revolution in Content Moderation

TikTok, the global social media giant, recently announced a significant shift in its content moderation strategy. The company is laying off hundreds of human moderators, including a large number in Malaysia, as it pivots towards greater use of AI in content moderation. This move signals a pivotal moment in the evolution of social media content management and reflects a broader trend in the tech industry. TikTok promises better efficiency and consistency with AI moderation. Let’s have a closer look at what this really means.

Content moderation is the critical process of reviewing and managing user-generated content (UGC) on digital platforms to maintain a safe, positive online environment. By screening for and removing harmful, illegal, or inappropriate content like hate speech and graphic material, moderation serves multiple essential purposes: enforcing community standards, protecting users, maintaining brand reputation, and ensuring legal compliance - all while fostering healthy user engagement.

Under the EU's Digital Services Act (DSA), major online platforms must report their content moderation decisions daily to the DSA Transparency Database. Since September 2023, they've logged over 735 billion content decisions. On any given day, moderators make millions of decisions about what content stays, what goes, and what gets limited visibility on your feed.

The scale of this task is mind-boggling. Each of us generates about 102 MB of data every minute - that's like taking 40-50 photos on your smartphone every sixty seconds. Now multiply that by billions of social media users worldwide. To handle this massive volume of user-generated content, platforms have increasingly turned to hybrid moderation approaches that combine artificial intelligence with human oversight. This evolution has spurred rapid growth in the AI-powered content moderation market, which is projected to reach $14 billion by 2029.

Facebook's moderation system offers insight into how major platforms approach content moderation: approximately 99% of flagged content is identified by its AI systems, while human moderators concentrate on the most complex or nuanced cases that require careful judgment. This division of labor reflects the industry's broader trend of combining AI's scalability with human expertise for more “challenging” content decisions.

So what does “challenging” content look like? Imagine spending your workday viewing the worst content the internet has to offer - not just once or twice, but hundreds of times. That's the reality for human moderators who handle the content too complex or disturbing for AI to manage. While technology shields most of us from the internet's darkest corners, these digital “first responders” face a constant barrage of traumatic material that can leave lasting psychological scars.

The cost doesn't stop at psychological impact. Many moderators, often working in developing countries, face punishing quotas and low wages. This has created a troubling dynamic in which the mental burden of keeping global social media platforms safe falls disproportionately on workers in lower-income nations, a form of "digital colonialism" that rarely makes headlines. As AI takes over these roles, moderators' hard-earned expertise risks becoming obsolete.

In Malaysia alone, hundreds of moderators are losing their jobs as TikTok shifts to AI content moderation, and their families face an uncertain future as AI reshapes the industry. As AI advances, career plans built on moderation work are starting to look increasingly fragile.

What skills will still matter in an AI-driven world?

The Mechanics and Ethics of AI Moderation

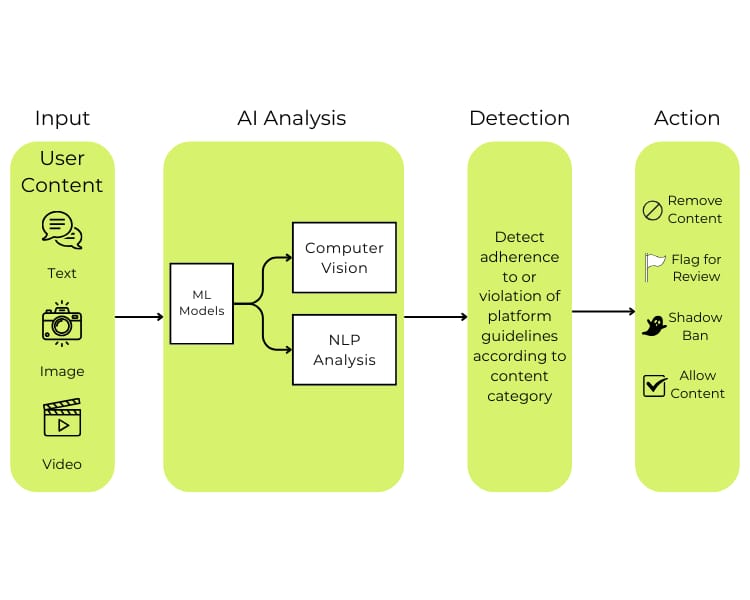

At its core, AI moderation is powered by sophisticated machine learning systems trained on vast datasets of pre-labeled content. These systems act as digital filters, using natural language processing and computer vision to analyze text, images, and videos in real time. When they detect potentially problematic content, from hate speech to graphic violence, they can automatically remove it (the most common action), flag it for human review, or restrict its visibility. You may have heard of “shadow banning,” an informal term for quietly limiting how widely a user's content is distributed, often without telling them.
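To make those three actions more concrete, here is a minimal Python sketch of how a hybrid pipeline might route a single piece of content once a classifier has produced a "harm score" between 0 and 1. Everything here is a hypothetical simplification: the `route` function, the single score per item, and the threshold values are illustrative assumptions, not any platform's actual system, which would combine many models and policy-specific rules.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only; real platforms tune
# these per policy area (hate speech, violence, spam, ...) and do not publish them.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.70
RESTRICT_THRESHOLD = 0.50


@dataclass
class Decision:
    action: str   # "remove", "human_review", "restrict", or "keep"
    score: float  # upstream classifier's estimated probability that content is harmful


def route(score: float) -> Decision:
    """Map a harm score from some upstream classifier to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    if score >= REMOVE_THRESHOLD:
        action = "remove"        # very confident: act automatically
    elif score >= REVIEW_THRESHOLD:
        action = "human_review"  # uncertain: escalate to a human moderator
    elif score >= RESTRICT_THRESHOLD:
        action = "restrict"      # borderline: keep it up but limit its reach
    else:
        action = "keep"          # likely fine: no action
    return Decision(action, score)


if __name__ == "__main__":
    for s in (0.98, 0.80, 0.60, 0.10):
        print(s, route(s).action)
```

The design choice the sketch highlights is that automation and human oversight are not either/or: the thresholds decide how much work flows to people, so moving `REVIEW_THRESHOLD` up or down directly trades moderator workload against the risk of automated mistakes.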

Platforms report whether detection was automated and whether the resulting decision was fully automated, partially automated, or not automated. According to a 2023 report, approximately 68% of all detections across the examined platforms were automated.
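As a rough illustration of how a figure like "68% of detections were automated" is computed, the Python sketch below tallies a handful of moderation records. The records, field names, and values are made up and only loosely modeled on the kind of information platforms submit to the DSA Transparency Database; they are assumptions for illustration, not the database's actual schema or data.

```python
from collections import Counter

# Hypothetical, made-up records: each says whether the problematic content was
# detected automatically and how automated the final decision was.
records = [
    {"platform": "A", "automated_detection": True,  "automated_decision": "fully"},
    {"platform": "A", "automated_detection": True,  "automated_decision": "partially"},
    {"platform": "B", "automated_detection": False, "automated_decision": "not"},
    {"platform": "B", "automated_detection": True,  "automated_decision": "fully"},
]


def automated_detection_share(rows):
    """Fraction of decisions whose triggering detection was automated."""
    if not rows:
        return 0.0
    return sum(r["automated_detection"] for r in rows) / len(rows)


def decision_breakdown(rows):
    """Count decisions by level of automation: fully, partially, or not automated."""
    return Counter(r["automated_decision"] for r in rows)


if __name__ == "__main__":
    print(f"{automated_detection_share(records):.0%} of detections automated")
    print(decision_breakdown(records))
```

Note that the two measures answer different questions: a detection can be automated while the decision that follows is only partially automated, for example when a human confirms what a classifier flagged.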

While these systems keep growing more sophisticated as they are trained on more data, they face notable limitations. Think of how a phrase like "you're killing it!" could praise excellent performance in one context but suggest violence in another. This is where AI often falls short: understanding the subtle nuances that human moderators grasp instinctively.
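A deliberately simple Python sketch shows why context matters. The keyword filter below is a hypothetical stand-in, far cruder than the machine learning classifiers platforms actually use, but it makes the failure mode easy to see: because it matches words rather than meaning, it flags a compliment and a threat in exactly the same way.

```python
import re

# A deliberately naive, hypothetical blocklist filter: it matches words, not meaning.
BLOCKLIST = {"kill", "killing"}


def naive_flag(text: str) -> bool:
    """Flag text if any lowercased word appears on the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(w in BLOCKLIST for w in words)


examples = [
    "You're killing it! Great presentation.",  # praise
    "I'm going to kill you if you show up.",   # threat
    "Our team had a great day at the park.",   # harmless
]

for text in examples:
    print(naive_flag(text), "|", text)
```

Modern models do better than this by looking at surrounding words, but the underlying problem remains: the signal that separates praise from a threat often lies in tone, relationships, or cultural context that the text alone may not reveal.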

This technological shift raises critical ethical considerations:

Accuracy and Nuance: The challenge of interpreting content that relies heavily on cultural context, sarcasm, or local expressions.

Bias and Fairness: The risk of perpetuating existing societal biases, as AI systems learn from historical data that may contain prejudiced moderation decisions.

Transparency: The complexity of AI decision-making creates a "black box" effect, making it difficult for users to understand or challenge moderation decisions.

Language and Culture: A Universal Challenge

Whether using AI algorithms or human moderators, one of the most fundamental challenges in content moderation is linguistic and cultural diversity. In Africa alone, there are over 3,000 languages spoken, yet most platforms lack sufficient resources to moderate content in these languages. This isn't just a technical limitation of AI - even human moderation teams often lack moderators with the necessary language skills and cultural context to make nuanced decisions about what constitutes harmful content in different societies.

This challenge is magnified when platforms try to apply universal standards across different cultural contexts. What might be considered offensive content in one culture could be perfectly acceptable in another:

🥗 In many Western countries, it's polite to finish all the food on your plate, while in some Asian cultures, such as China, leaving a bit of food shows the host that you are satisfied and is a sign of respect.

👐 Eating with hands is normal and traditional in many South Asian and Middle Eastern cultures, while it might be seen as improper in Western formal settings.

👍🏻 The thumbs-up gesture is friendly in many Western countries but can be highly offensive in some Middle Eastern countries.

🇦🇺 Words or phrases that are slurs in one language might be innocent in another, and even within a single language, the meaning of a word can vary depending on the country you are in.

Without a deep understanding of local languages and cultural nuances, both AI systems and human moderators risk either missing truly harmful content or incorrectly flagging legitimate speech.

While AI can help scale content moderation efforts, it typically performs best in languages with abundant training data, like English. For many other languages and cultural contexts, the technology may lack the sophistication to understand subtle cultural references, idioms, or context-dependent meanings that could determine whether content is harmful or not.

Looking Ahead: Balancing Innovation and Responsibility

The future of content moderation isn't just about technological advancement - it's about finding the right balance between innovation and responsible implementation. As platforms continue to embrace AI solutions, several priorities emerge:

Ethical AI Development: The development of AI moderation systems must be guided by robust ethical frameworks that actively address bias and protect user rights.

Global Digital Ethics: As content moderation evolves, we need clear international standards that protect digital workers. This is particularly crucial for roles involving exposure to traumatic content, where psychological well-being must be prioritized alongside operational efficiency.

Transparency and Accountability: For AI moderation to maintain public trust, platforms must commit to greater transparency about their processes and establish clear mechanisms for accountability when systems make mistakes.

The success of AI in content moderation won't just be measured by its technical capabilities, but by how well it serves and protects both users and moderators alike.

Until next time.

On behalf of Team Lumiera

Lumiera has gathered the brightest people from the technology and policy sectors to give you top-quality advice so you can navigate the new AI Era.

Follow the carefully curated Lumiera podcast playlist to stay informed and challenged on all things AI.